Vadim's homelab

This page describes the resources OpenShift uses after an installation that mirrors my homelab setup.

Compute

-

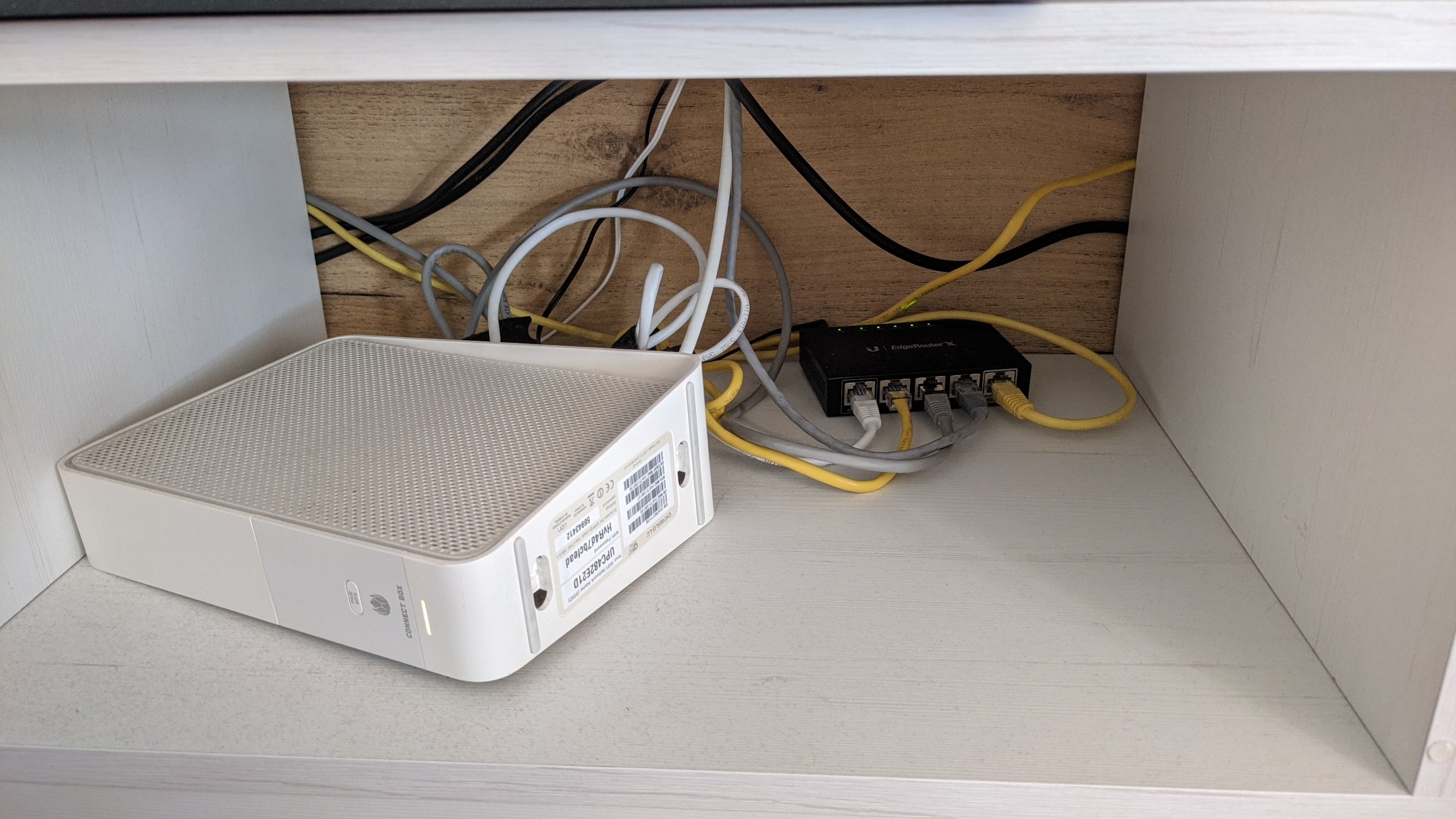

Ubiquity EdgeRouter ER-X

- runs DHCP (embedded), custom DNS server via AdGuard

-

NAS/Bastion host

- haproxy for loadbalancer

- ceph cluster for PVs

- NFS server for shared data

-

control plane

- Intel i5 CPU, 16+4 GB RAM

- 120 GB NVME disk

-

compute nodes

- Lenovo X220 laptop

Router setup

Once the nodes have booted, assign them static IPs using MAC pinning.
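On EdgeOS, MAC pinning is normally expressed as DHCP static mappings. The following is a minimal sketch that prints the `set` commands for a couple of nodes. The shared network name `LAN`, the subnet, the host names, and the MAC and IP addresses are all placeholders, not values from my network.

```shell
#!/bin/sh
# Sketch: print EdgeOS DHCP static-mapping commands for a list of nodes.
# NETWORK, SUBNET and every host/MAC/IP below are placeholder assumptions.

NETWORK="LAN"             # assumed dhcp-server shared-network-name
SUBNET="192.168.1.0/24"   # assumed DHCP subnet

# Emit the two "set" commands that pin one MAC address to one IP.
static_mapping() {
    name="$1"; mac="$2"; ip="$3"
    base="set service dhcp-server shared-network-name $NETWORK subnet $SUBNET static-mapping $name"
    echo "$base ip-address $ip"
    echo "$base mac-address $mac"
}

static_mapping master  "aa:bb:cc:dd:ee:01" "192.168.1.10"
static_mapping compute "aa:bb:cc:dd:ee:02" "192.168.1.11"
```

The printed lines are meant to be pasted into an EdgeOS `configure` session, followed by `commit` and `save`.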

EdgeRouter has dnsmasq to support custom DNS entries, but I wanted network-wide ad filtering and DNS-over-TLS for free, so I followed this guide to install AdGuard Home on the router.

This gives a fancy UI for DNS rewrites and useful stats about the nodes on the network.
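For reference, an OpenShift cluster needs three names to resolve: `api`, `api-int` and the `*.apps` wildcard, all under `<cluster>.<base domain>`. The sketch below lists them as rewrite pairs to enter in the UI, assuming they all point at the load balancer. The cluster name `homelab`, the domain `example.com` and the IP are made-up placeholders.

```shell
#!/bin/sh
# Sketch: list the DNS rewrites a cluster typically needs.
# Cluster name, base domain and load balancer IP are placeholder assumptions.

# dns_rewrites CLUSTER BASE_DOMAIN LB_IP
dns_rewrites() {
    cluster="$1"; base_domain="$2"; lb_ip="$3"
    # api and api-int serve the Kubernetes API; *.apps covers ingress routes.
    for name in api api-int "*.apps"; do
        printf '%s.%s.%s -> %s\n' "$name" "$cluster" "$base_domain" "$lb_ip"
    done
}

dns_rewrites homelab example.com 192.168.1.5
```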

NAS/Bastion setup

The HAProxy setup is fairly standard; see ocp4-helpernode for the general idea.
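To give a flavour of what "fairly standard" means, here is a rough sketch that renders TCP pass-through `listen` sections for the four ports a cluster exposes (6443 for the API, 22623 for the machine config server, 80 and 443 for ingress). It is not my actual configuration: the IP addresses and section names are placeholders, and the output is only a fragment to append to an haproxy.cfg that already has `global` and `defaults` sections.

```shell
#!/bin/sh
# Sketch: render HAProxy "listen" sections for an OpenShift load balancer.
# All IPs and section names are placeholder assumptions.

# render_listener NAME PORT BACKEND_IP...
render_listener() {
    name="$1"; port="$2"; shift 2
    echo "listen $name"
    echo "    bind *:$port"
    echo "    mode tcp"
    echo "    balance roundrobin"
    i=0
    for ip in "$@"; do
        i=$((i + 1))
        echo "    server ${name}-${i} ${ip}:${port} check"
    done
}

MASTER="192.168.1.10"    # placeholder control plane IP
COMPUTE="192.168.1.11"   # placeholder compute node IP

render_listener api            6443  "$MASTER"
render_listener machine-config 22623 "$MASTER"
render_listener ingress-http   80    "$MASTER" "$COMPUTE"
render_listener ingress-https  443   "$MASTER" "$COMPUTE"
```

During installation the bootstrap node would also have to appear as a backend for the first two sections, and be removed once bootstrapping completes.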

Along with a (fairly standard) NFS server, I also run a single-node Ceph cluster so that I can benefit from CSI, automatic provisioning, snapshots, etc.

Installation

Currently, a "single node install" requires a dedicated throwaway bootstrap node, so I used the future compute node (the X220 laptop) as the bootstrap node. Once the master was installed, the laptop was re-provisioned to become a compute node.

Upgrading

Since I use a single master install, upgrades are a bit complicated. Both nodes are labelled as workers, so upgrading those is not an issue.

Upgrading a single master is tricky, so I use this script to pivot the node into the expected master ignition content, which runs rpm-ostree rebase <new content>. This script needs to be cancelled before it starts installing OS extensions (NetworkManager-ovs etc.) as it's necessary.

This class of issues should be addressed in 4.8.

Useful software

The Grafana operator is incredibly useful for setting up monitoring.

It lets me define the configuration for various datasources (e.g. Promtail+Loki) and keep dashboard source code under control using CRs.

SnapScheduler makes periodic snapshots of some PVs so that risky changes can be reverted.
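A schedule is declared with a SnapshotSchedule CR. As a hedged sketch, the function below prints such a manifest. The schedule name, namespace, cron expression, retention count and the `snapshot: "enabled"` label are illustrative assumptions, not the values used in my cluster.

```shell
#!/bin/sh
# Sketch: print a SnapScheduler SnapshotSchedule manifest.
# Name, namespace, cron expression, retention and label are assumptions.

# snapshot_schedule NAME NAMESPACE CRON KEEP
snapshot_schedule() {
    name="$1"; namespace="$2"; cron="$3"; keep="$4"
    cat <<EOF
apiVersion: snapscheduler.backube/v1
kind: SnapshotSchedule
metadata:
  name: $name
  namespace: $namespace
spec:
  schedule: "$cron"
  retention:
    maxCount: $keep
  claimSelector:
    matchLabels:
      snapshot: "enabled"
EOF
}

# Daily at 03:00, keeping the last 7 snapshots of every labelled PVC.
snapshot_schedule daily nextcloud "0 3 * * *" 7
```

The output can be piped into `oc apply -f -`. Only PVCs in that namespace carrying the matching label would be snapshotted.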

The Tekton operator helps me run a few cleanup jobs in the cluster periodically.

The most useful pipeline I've been using runs oc adm must-gather on this cluster, unpacks the result and stores it in Git. This lets me track changes to the cluster in a Git repo and, unlike with a GitOps solution such as ArgoCD, I can still tinker with things in the console.
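Stripped of the Tekton wiring, the core of such a pipeline could look roughly like the function below. This is a simplified sketch rather than the actual pipeline: the function name `archive_must_gather`, the `must-gather` subdirectory and the commit message format are all made up for illustration, and it assumes `oc` is already logged in and the target directory is an existing Git repository.

```shell
#!/bin/sh
# Sketch: store must-gather output in a Git repository.
# Function name, directory layout and commit message are assumptions.

# archive_must_gather /path/to/repo
archive_must_gather() {
    repo="$1"
    dest="$repo/must-gather"

    # Start from an empty directory so that files which no longer exist
    # in the cluster also disappear from the repository.
    rm -rf "$dest"
    mkdir -p "$dest"

    # Write the gathered data as plain files under the destination.
    oc adm must-gather --dest-dir="$dest" || return 1

    git -C "$repo" add -A must-gather
    # Commit only when the staged content differs from the last commit.
    if git -C "$repo" diff --cached --quiet; then
        echo "no changes"
    else
        git -C "$repo" commit -q -m "must-gather $(date -u +%Y-%m-%dT%H:%M:%SZ)"
    fi
}

# Run only when a repository path is passed on the command line.
if [ "$#" -gt 0 ]; then
    archive_must_gather "$1"
fi
```

In practice must-gather output contains logs and timestamps that change on every run, so a real pipeline would probably filter the files before committing to keep the history readable.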

Other useful software running in my cluster:

- Gitea - git server

- HomeAssistant - controls smart home devices

- BitWarden_rs - password storage

- Minio - S3-like storage

- Nextcloud - file sync software

- Navidrome - music server

- MiniFlux - RSS reader

- Matrix Synapse - federated chat app

- Pleroma - federated microblogging app

- Wallabag - Read-It-Later app